What is ETL?

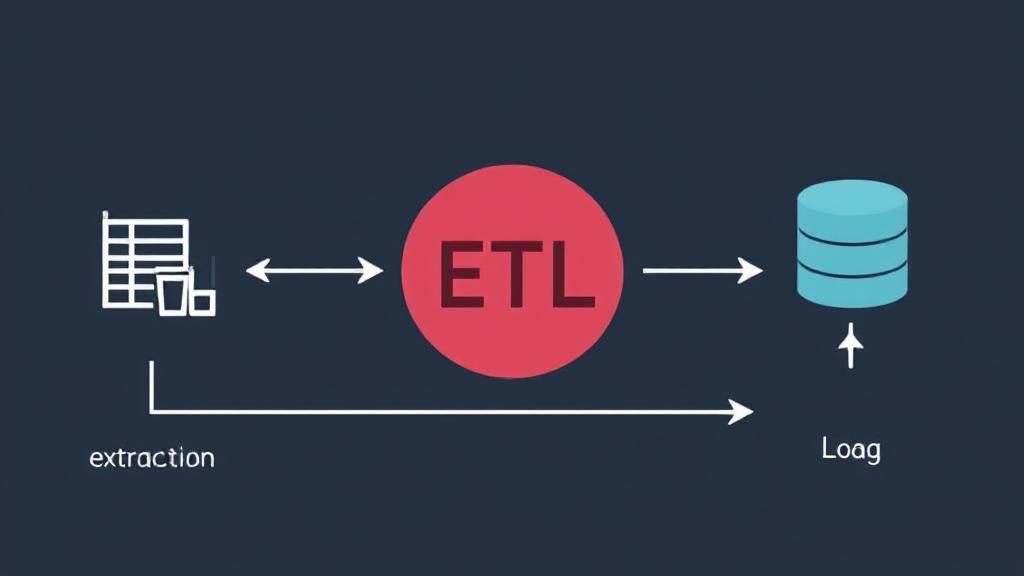

ETL (Extract, Transform, Load) is a three-step process for moving data from various sources into a target database or data warehouse. This standardized approach helps ensure data quality, consistency, and usability for organizations that want to make data-driven decisions.

Extract

The extraction phase pulls data from multiple source systems while preserving data integrity and minimizing the impact on source performance. Common data sources include:

- Relational databases (MySQL, PostgreSQL, Oracle)

- Flat files (CSV, Excel)

- APIs and web services

- Legacy systems

- IoT devices

- Social media platforms

- CRM systems

Key Considerations in Extraction:

- Data Source Variety

- Data Volume

- Data Quality

For more on data extraction techniques, you can refer to this guide on data extraction.
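As a rough illustration of extraction, the sketch below pulls rows from two of the source types listed above: a flat file (CSV) and a relational database. It uses only the Python standard library. The in-memory SQLite database stands in for a system such as MySQL or PostgreSQL, and the sample data, the `orders` table, and the function names `extract_csv` and `extract_table` are assumptions made up for this example.

```python
import csv
import io
import sqlite3


def extract_csv(text):
    """Read CSV text into a list of dicts, one per row."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_table(conn, query):
    """Run a read-only query and return the rows as dicts."""
    cur = conn.execute(query)
    columns = [col[0] for col in cur.description]
    return [dict(zip(columns, row)) for row in cur.fetchall()]


# Hypothetical flat-file source.
CSV_SOURCE = "id,name,country\n1,Ada,UK\n2,Grace,US\n"

# Hypothetical relational source (in-memory SQLite as a stand-in).
source_db = sqlite3.connect(":memory:")
source_db.execute(
    "CREATE TABLE orders (id INTEGER, customer_id INTEGER, amount REAL)"
)
source_db.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(10, 1, 25.0), (11, 2, 40.5)],
)

customers = extract_csv(CSV_SOURCE)
# Selecting only the needed columns keeps the load on the source small.
orders = extract_table(source_db, "SELECT id, customer_id, amount FROM orders")
```

Note that values read from the CSV arrive as strings, while the database rows keep their column types. Reconciling such differences is typically left to the transform phase.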

Transform

Transformation is often the most complex phase, where raw data is converted into a format suitable for analysis. Common transformation operations include:

- Data cleaning and validation

- Standardization of formats and units

- Handling missing values

- Deduplication

- Data integration and aggregation

- Key mapping and joining related data

"Garbage in, garbage out. The transformation phase is where we ensure data quality and consistency." - Ralph Kimball

Tools like Apache Spark and Talend are popular for handling data transformation tasks.
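To make the operations above concrete, here is a minimal sketch in plain Python rather than Spark or Talend. It covers validation, deduplication, format standardization, and missing-value handling for a handful of rows. The field names (`id`, `name`, `signup`, `country`), the day/month/year input date format, and the `"UNKNOWN"` default are all assumptions for illustration.

```python
from datetime import datetime


def transform(rows):
    """Clean, standardize, and deduplicate raw customer rows."""
    seen_ids = set()
    result = []
    for row in rows:
        # Validation: skip rows without a usable numeric key.
        raw_id = (row.get("id") or "").strip()
        if not raw_id.isdigit():
            continue
        customer_id = int(raw_id)
        # Deduplication: keep the first row seen for each id.
        if customer_id in seen_ids:
            continue
        seen_ids.add(customer_id)
        # Standardization: trimmed, title-cased names and ISO 8601 dates.
        name = (row.get("name") or "").strip().title()
        signup = datetime.strptime(row["signup"], "%d/%m/%Y").date().isoformat()
        # Missing values: fall back to an explicit default.
        country = (row.get("country") or "").strip().upper() or "UNKNOWN"
        result.append(
            {"id": customer_id, "name": name, "signup": signup, "country": country}
        )
    return result


raw_rows = [
    {"id": "1", "name": "  ada lovelace ", "signup": "10/12/2023", "country": "uk"},
    {"id": "1", "name": "Ada Lovelace", "signup": "10/12/2023", "country": "UK"},
    {"id": "2", "name": "grace hopper", "signup": "01/02/2024", "country": ""},
    {"id": "", "name": "no key", "signup": "01/01/2024", "country": "US"},
]
clean_rows = transform(raw_rows)
```

Here the duplicate of customer 1 and the row with an empty key are dropped, leaving two cleaned rows. A real pipeline would more likely log or quarantine rejected rows than discard them silently.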

Load

The final phase involves writing the transformed data into the target system, such as:

- A data warehouse

- A data mart

- A reporting database

- Cloud storage solutions

Loading Strategies:

- Full Load: Complete replacement of existing data

- Incremental Load: Only new or modified data is loaded
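The two strategies can be sketched as follows, assuming a SQLite target with a hypothetical `customers` table keyed on `id`. The function names are invented for this example. The incremental version uses SQLite's `INSERT OR REPLACE`, which is only one way to apply new or modified rows. Other databases offer their own upsert or merge syntax.

```python
import sqlite3


def full_load(conn, rows):
    """Full load: replace everything in the target table."""
    with conn:  # commits on success, rolls back on error
        conn.execute("DELETE FROM customers")
        conn.executemany(
            "INSERT INTO customers (id, name) VALUES (:id, :name)", rows
        )


def incremental_load(conn, rows):
    """Incremental load: add new rows and overwrite modified ones."""
    with conn:
        # OR REPLACE swaps out any existing row with the same primary key.
        conn.executemany(
            "INSERT OR REPLACE INTO customers (id, name) VALUES (:id, :name)",
            rows,
        )


target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

full_load(target, [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}])
incremental_load(
    target, [{"id": 2, "name": "Grace Hopper"}, {"id": 3, "name": "Alan"}]
)
loaded = target.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
```

After both calls, row 1 is untouched, row 2 carries the updated name, and row 3 is new. The incremental load only had to process two rows instead of the whole table.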

Benefits of ETL

ETL processes are vital for several reasons:

- Data Centralization

- Improved Data Quality

- Enhanced Decision Making

- Reduced Data Redundancy

- Increased Data Integration

Modern ETL Tools and Technologies

Several tools help streamline the ETL process:

- Open-source tools

- Commercial tools

- Cloud-based tools

Best Practices

Planning and Design

- Document data sources and transformations

- Define clear data quality rules

- Plan for scalability

- Consider security requirements
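One possible way to make data quality rules explicit is to declare them as named checks that can be documented, reviewed, and run against every row. The sketch below assumes hypothetical order rows with `id`, `amount`, and `currency` fields. The rule names and thresholds are illustrative only.

```python
# Hypothetical rules: each maps a rule name to a predicate over one row.
QUALITY_RULES = {
    "id_present": lambda row: row.get("id") is not None,
    "amount_non_negative": lambda row: (
        isinstance(row.get("amount"), (int, float)) and row["amount"] >= 0
    ),
    "currency_is_three_letters": lambda row: (
        isinstance(row.get("currency"), str) and len(row["currency"]) == 3
    ),
}


def check_row(row, rules=QUALITY_RULES):
    """Return the names of all rules the row violates."""
    return [name for name, rule in rules.items() if not rule(row)]


rows = [
    {"id": 1, "amount": 19.99, "currency": "EUR"},
    {"id": None, "amount": -5, "currency": "euro"},
]
violations = {index: check_row(row) for index, row in enumerate(rows)}
```

Keeping the rules in one table-like structure means the same definitions can feed both the pipeline and its documentation.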

Monitoring and Maintenance

Regular monitoring should include:

| Aspect | Metrics to Track |
|---|---|
| Performance | Processing time, resource usage |
| Quality | Error rates, data accuracy |
| Reliability | Job success rate, system uptime |
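A few of these metrics can be captured with very little code. The following sketch wraps a job step and records processing time, error rate, and whether the run succeeded. The wrapper `run_with_metrics`, the sample step `parse_amount`, and the metric names are assumptions for illustration, not part of any particular monitoring tool.

```python
import time


def run_with_metrics(job, rows):
    """Run `job` on every row and collect simple run metrics."""
    started = time.perf_counter()
    errors = 0
    for row in rows:
        try:
            job(row)
        except Exception:
            # Count the failure and continue with the next row.
            errors += 1
    elapsed = time.perf_counter() - started
    total = len(rows)
    return {
        "processing_seconds": elapsed,
        "rows_total": total,
        "rows_failed": errors,
        "error_rate": errors / total if total else 0.0,
        "succeeded": errors == 0,
    }


def parse_amount(row):
    # Hypothetical job step: raises ValueError on malformed input.
    return float(row["amount"])


metrics = run_with_metrics(
    parse_amount,
    [{"amount": "1.5"}, {"amount": "oops"}, {"amount": "3"}, {"amount": "2"}],
)
```

In practice these values would be sent to a logging or metrics system and compared against thresholds, so that a rising error rate or a slow run triggers an alert.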

The Future of ETL

Modern trends are reshaping traditional ETL:

- ELT (Extract, Load, Transform) gaining popularity with cloud data warehouses

- Real-time streaming increasingly replacing batch processing

- AI/ML automation of transformation rules

- DataOps and automated testing integration
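To show how ELT differs from ETL in the order of steps, here is a small sketch in which raw data is loaded first and then transformed with SQL inside the target system. An in-memory SQLite database stands in for a cloud data warehouse. The table names `raw_orders` and `revenue_by_country` and the sample rows are hypothetical.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Load: raw data lands untransformed in a staging table.
warehouse.execute("CREATE TABLE raw_orders (id TEXT, amount TEXT, country TEXT)")
warehouse.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [("1", "10.50", " uk"), ("2", "4.25", "US "), ("3", "7.00", "uk")],
)

# Transform: cleaning and aggregation run as SQL inside the target system.
warehouse.execute(
    """
    CREATE TABLE revenue_by_country AS
    SELECT UPPER(TRIM(country)) AS country,
           SUM(CAST(amount AS REAL)) AS revenue
    FROM raw_orders
    GROUP BY UPPER(TRIM(country))
    """
)
report = warehouse.execute(
    "SELECT country, revenue FROM revenue_by_country ORDER BY country"
).fetchall()
```

Because the raw table is kept, the transformation can be changed and rerun later without extracting the data again.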

ETL remains a crucial component in data integration strategies, evolving with new technologies and methodologies. Organizations must stay current with best practices while adapting to changing business requirements and technological capabilities.